How are our eyes structured and how do they work?

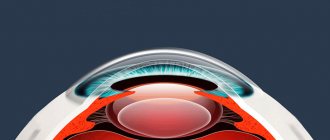

The human visual system has a complex structure. By a commonly cited estimate, vision supplies up to 90% of the information we receive about the world around us. A person can distinguish millions of shades and has binocular vision, which lets us judge the size of an object, the distance to it, and the relative sizes of surrounding objects. In addition, the eye can shift its focus between distant and near objects (this is called accommodation), regulate the amount of light entering it, partly compensate for chromatic and spherical aberrations, and more.

How does the eye perceive an image? Light reflected from surrounding objects first passes through the cornea, the transparent, convex dome at the front of the eye. It then reaches the pupil, an opening in the center of the iris. Because the pupil can contract or dilate, the eye can adapt to lighting of very different intensities.
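The effect of pupil size can be put into numbers with simple geometry. The sketch below assumes that the amount of light admitted is proportional to the area of the pupil opening, and it uses 2 mm and 8 mm as illustrative diameters for a constricted and a fully dilated pupil. Both diameters and the helper name `pupil_area_mm2` are assumptions for illustration, not values from this article.

```python
import math


def pupil_area_mm2(diameter_mm: float) -> float:
    """Area of a circular pupil opening, in square millimetres."""
    return math.pi * (diameter_mm / 2) ** 2


# Illustrative diameters (assumed): bright light vs. darkness.
bright = pupil_area_mm2(2.0)
dark = pupil_area_mm2(8.0)
print(f"{bright:.1f} mm^2 vs {dark:.1f} mm^2: {dark / bright:.0f}x more light")
# prints: 3.1 mm^2 vs 50.3 mm^2: 16x more light
```

Because area grows with the square of the diameter, a fourfold change in pupil width corresponds to roughly a sixteenfold change in admitted light; the retina itself accounts for the rest of the eye's much wider adaptation range.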

Next, the light passes through the lens, which refracts it further and focuses the image on the retina. The lens also plays a critical role in accommodation: by changing its curvature, it keeps vision sharp at both long and short distances. Thanks to this structure, a person with normal vision can easily see both the stars in the night sky and the small print in a book.

The refracted, focused light then reaches the retina. This is a complex structure, and diseases of the retina can lead to irreversible loss of vision. The retina contains approximately 137 million photoreceptors of different types, reportedly capable of processing up to 10 billion photons. The image formed on the retina is smaller than the object and upside down. The photoreceptors convert light into electrical impulses, which travel along nerve fibers and through the optic nerve to the visual areas of the brain. Each eye perceives the scene separately, but the brain combines the two images into a single, familiar picture.
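Accommodation is usually measured in diopters, the reciprocal of a distance in metres. As a rough sketch, assuming the stars are effectively at infinity and the book is held at 25 cm (a conventional reading distance chosen here for illustration), the extra focusing power the lens must supply is the difference of the two reciprocal distances. The helper name `accommodation_diopters` is hypothetical.

```python
def accommodation_diopters(near_m: float, far_m: float = float("inf")) -> float:
    """Extra optical power (diopters) needed to refocus from far_m to near_m.

    Distances are in metres. In Python, 1.0 / inf evaluates to 0.0, so the
    default models an object effectively at infinity, such as a star.
    """
    return 1.0 / near_m - 1.0 / far_m


# From the night sky to a book held about 25 cm away (assumed distance):
print(accommodation_diopters(0.25))  # prints 4.0
```

Under these assumptions the lens must add about 4 diopters of power to switch from the stars to the page.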

How humans and animals perceive light

Light is electromagnetic radiation, that is, high-frequency electromagnetic waves, of which a person perceives only a certain frequency range. We see primary light sources, such as the sun or a fire, directly, and most other objects by the light they reflect. Transparent media, such as air or clean glass, let light pass through almost unchanged and therefore remain practically invisible to us.

The human eye can detect frequencies from about 400 to 790 terahertz. People do not see ultraviolet or infrared radiation. Many animals, fish among them, are able to “see” near-ultraviolet light, at wavelengths just below about 400 nm. This ability helps them survive in difficult conditions, hunt, and protect themselves from predators.
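Frequencies can be converted to the more familiar wavelengths with λ = c / f. The sketch below does this for the two ends of the range quoted above. It relies only on the defined speed of light in vacuum; the helper name `thz_to_nm` is illustrative.

```python
SPEED_OF_LIGHT = 299_792_458  # metres per second (exact, by definition)


def thz_to_nm(freq_thz: float) -> float:
    """Convert a frequency in terahertz to a vacuum wavelength in nanometres."""
    wavelength_m = SPEED_OF_LIGHT / (freq_thz * 1e12)
    return wavelength_m * 1e9


for f in (400, 790):
    print(f"{f} THz ~ {thz_to_nm(f):.0f} nm")
# prints:
# 400 THz ~ 749 nm
# 790 THz ~ 379 nm
```

So the visible band runs from roughly 380 nm (violet) to 750 nm (red); ultraviolet lies at shorter wavelengths and infrared at longer ones.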

Unlike ultraviolet, infrared is invisible to most animals. The exceptions are species that nature has “rewarded” with special receptors in various parts of the body, such as the heat-sensing pit organs of pit vipers. A person can see infrared radiation only with special equipment, such as thermal imagers.

Why does the image appear upside down on the retina?

Let's consider this phenomenon in more detail. Why does the upright world we see form an upside-down image on the retina? It is known from physics that a converging lens bends light rays so that an object located beyond its focal point forms a real, inverted image on the other side. The eye contains two natural converging lenses, the cornea and the crystalline lens, through which light passes before reaching the retina, and it is refracted three times along the way.

The first refraction occurs when light crosses the cornea. The light then reaches the lens, which is biconvex, and is refracted again at its front surface and once more at its rear surface. The image is not flipped back and forth at each of these surfaces: together, the cornea and the lens act as a single converging optical system, and it is this combined system that forms a reduced, inverted image on the retina.

Once the image has formed, the retinal cells convert the received information into electrical impulses, which are transmitted along the optic nerve to specialized visual areas of the brain. There the familiar picture is formed: the sky above and the earth below. This process is almost instantaneous. An experiment by neuroscientists at the Massachusetts Institute of Technology showed that the human brain can process an image shown for as little as 13 milliseconds. Participants had to signal when they saw a particular scene, such as a car or a still life, in a sequence of pictures changing every 13 to 80 milliseconds. The researchers believe that this ability to process information quickly helps us choose which objects to examine more closely. The eyes can make up to about three movements per second, and in that time the brain must identify the information in the field of view, understand what it has seen, and decide where to look next.
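The claim that the retinal image is both reduced and inverted can be checked with the thin-lens equation, 1/f = 1/d_o + 1/d_i, and the magnification m = -d_i/d_o, where a negative m means an inverted image. The sketch below is a deliberate simplification: it treats the whole eye as one thin lens in air with an assumed focal length of about 17 mm (a figure often used in simplified models of the eye), and the 1.8 m person standing 10 m away is an illustrative example. The function name `thin_lens_image` is hypothetical.

```python
def thin_lens_image(focal_m: float, object_dist_m: float, object_height_m: float):
    """Return (image distance, image height) in metres for a thin converging lens.

    Uses 1/f = 1/d_o + 1/d_i and magnification m = -d_i / d_o.
    A negative image height means the image is upside down.
    """
    image_dist_m = 1.0 / (1.0 / focal_m - 1.0 / object_dist_m)
    magnification = -image_dist_m / object_dist_m
    return image_dist_m, magnification * object_height_m


# Assumed values: f = 17 mm, a 1.8 m tall person standing 10 m away.
d_i, h_i = thin_lens_image(0.017, 10.0, 1.8)
print(f"image distance ~ {d_i * 1000:.1f} mm, image height ~ {h_i * 1000:.2f} mm")
# prints: image distance ~ 17.0 mm, image height ~ -3.07 mm
```

The negative height reflects the inversion, and an object 1.8 m tall produces an image only about 3 mm tall, consistent with the description of the retinal image as smaller than the object and upside down.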

Module theory

Figure 11. Random-dot stereograms by Béla Julesz: the floating square

The second starting point in Marr's research (after Warrington's work) is the assumption that our visual system has a modular structure. In computer terms, our main Vision program calls on a wide range of subroutines, each of which is independent of the others and can operate on its own. A prime example of such a subroutine (or module) is stereoscopic vision, in which depth is perceived by processing the two slightly different images received by the two eyes. It was previously believed that to see in three dimensions, we first recognize whole images and then decide which objects are closer and which are farther away.

In 1960, Béla Julesz, who was awarded the Heineken Prize in 1985, demonstrated that depth perception with two eyes can arise solely from comparing small differences between the images on the two retinas. One can therefore perceive depth even where there are no objects and none are expected to be present. For his experiments, Julesz devised stereograms made of randomly placed dots (see Fig. 11). The image for the right eye is identical to the image for the left eye in every respect except for a central square region, which is cut out, shifted slightly to one side, and placed back on the background. The gap left behind is then filled with new random dots. When the two images (in neither of which any object can be recognized) are viewed through a stereoscope, the square that was cut out appears to float above the background. Such stereograms contain spatial information that our visual system processes automatically. Stereoscopy is thus an autonomous module of the visual system. Module theory has proven to be quite productive.
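Julesz's construction is easy to reproduce, and it also shows why no object recognition is needed: the hidden square can be located purely by comparing small patches of the two images. The sketch below is an illustration under assumptions, not Julesz's original procedure or Marr's own stereo algorithm; the image size, square size, shift, the simple window-matching method, and the function names `make_stereogram` and `disparity_at` are all hypothetical. Shifting the square to the left in the right-eye image gives crossed disparity, which is what should make it appear in front of the background in a stereoscope.

```python
import random


def make_stereogram(size=64, square=24, shift=3, seed=0):
    """Build a Julesz-style random-dot pair as lists of rows of 0/1 dots."""
    rng = random.Random(seed)
    left = [[rng.randint(0, 1) for _ in range(size)] for _ in range(size)]
    right = [row[:] for row in left]  # right-eye image starts as an exact copy
    top = (size - square) // 2        # top-left corner of the central square
    for r in range(top, top + square):
        # Paste the central square `shift` columns further left in the right image.
        for c in range(top, top + square):
            right[r][c - shift] = left[r][c]
        # Fill the strip uncovered by the shift with fresh random dots.
        for c in range(top + square - shift, top + square):
            right[r][c] = rng.randint(0, 1)
    return left, right


def disparity_at(left, right, r, c, window=3, max_shift=5):
    """Return the shift d whose patch around right[r][c - d] best matches left[r][c].

    Only local dot-by-dot comparisons are used; no object is ever recognized.
    The caller must keep the window and shifts inside the image bounds.
    """
    best_d, best_score = 0, -1
    for d in range(max_shift + 1):
        score = sum(
            left[r + dr][c + dc] == right[r + dr][c + dc - d]
            for dr in range(-window, window + 1)
            for dc in range(-window, window + 1)
        )
        if score > best_score:
            best_d, best_score = d, score
    return best_d


left, right = make_stereogram()
print(disparity_at(left, right, 32, 32))  # inside the square: expected 3
print(disparity_at(left, right, 5, 10))   # background: expected 0
```

The point matches the argument above: the depth signal is already present in the local dot correspondences, before any shape has been recognized.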

How does a newborn actually see objects?

It is a common belief that newborns see the world upside down. This is only partly true. In the first 30 to 50 days, a baby's vision is actually very imperfect: the eyeball is slightly flattened, the retina is still developing, and the macula, which is responsible for central vision, is not yet fully formed. The baby can only distinguish light and dark spots. For example, if you turn on a lamp in a dark room, a newborn will see only a halo of light and nothing more; everything else looks blurry.

The brain's ability to correct the image transmitted by the eye depends on experience. But since the baby cannot yet focus its gaze or see objects clearly, there is, in effect, nothing to turn over. By two months of age, the light sensitivity of the retina increases almost fivefold, the eye muscles grow stronger, and objects acquire contours, although they are still perceived in only two dimensions, length and width. The child begins to show interest in them and reach for them, and in doing so learns to tell up from down.

Lecture by Elizabeth Warrington

In 1973, Marr attended a lecture by the British neuropsychologist Elizabeth Warrington. She noted that many of the patients she had examined with lesions of the right parietal lobe could readily recognize and describe a variety of objects, provided they saw them in their usual orientation. For example, such patients had little difficulty identifying a bucket seen from the side, but could not recognize the same bucket seen from above. Even when told that they were looking at a bucket from above, they flatly refused to believe it! Even more surprising was the behavior of patients with damage to the left side of the brain. Such patients often cannot speak and therefore cannot name the object they are looking at or describe its purpose. Nevertheless, they can show that they correctly perceive the object's geometry regardless of the viewing angle. This prompted Marr to write: “Warrington's lecture led me to the following conclusions. First, the representation of an object's shape is stored in a different place in the brain from the representation of its use and purpose, which is why the two are so different. Second, vision alone can provide an internal description of the shape of an observed object, even if that object is not recognized in the usual way... Elizabeth Warrington had pointed out the most essential fact of human vision: it tells us about shape, space, and the spatial arrangement of objects.” If this is indeed the case, then scientists working on visual perception and artificial intelligence (including computer vision) will have to exchange the feature-detector theory derived from Hubel and Wiesel's experiments for an entirely new approach.

Can a person learn to see the world upside down?

This question interests many people. The first experiment on this topic was carried out by the American psychologist George M. Stratton. In 1896, he built an invertoscope, an optical device that re-inverts the image so that it falls on the retina the right way up. Wearing an invertoscope therefore makes the world appear upside down. The first experiments showed that a person adapts to this perception within a few days: after about three days the disorientation decreased, and by the eighth day of the experiment new hand-eye coordination had developed. When the invertoscope was removed, the normal world looked unusual, and it again took some time to readjust. Moreover, this kind of adaptation has been reported only in humans: in a similar experiment, a monkey fell into complete apathy, and only a week later did it gradually begin to react to strong stimuli, while remaining almost motionless.

Today the invertoscope is used in various psychology experiments. It is also sometimes used to train the vestibular system of astronauts and sailors and to help prevent seasickness.

From image to data processing

David Marr of the MIT Artificial Intelligence Laboratory was the first to approach the subject from a completely different angle, in his book Vision, published after his death. In it, he set out to examine the central problem and suggest possible ways to solve it. Marr's results are, of course, not final and remain open to research from different directions, but the main strength of his book is the logic and consistency of its conclusions. In any case, Marr's approach provides a very useful basis on which to build studies of impossible objects and ambiguous figures. In the following pages we will try to follow Marr's train of thought.

Marr described the shortcomings of the traditional theory of visual perception as follows:

“Trying to understand visual perception by studying only neurons is like trying to understand bird flight by studying only feathers. It simply cannot be done. To understand bird flight, we have to understand aerodynamics; only then do the structure of feathers and the different shapes of birds' wings make sense.” In this context, Marr credits J. J. Gibson as the first to address important questions in this field. According to Marr, Gibson's most important contribution was to note that “the most important thing about the senses is that they are channels of information from the external world to our perception (...) He posed the critical question: how does each of us obtain the same perceptions in everyday life under constantly changing conditions? This is a very important question, showing that Gibson correctly viewed the problem of visual perception as reconstructing, from sensory information, the ‘correct’ properties of objects in the external world.” And this brings us to the domain of information processing.

This does not mean that Marr wanted to ignore other explanations of vision. On the contrary, he specifically emphasizes that vision cannot be satisfactorily explained from a single point of view. Explanations of everyday phenomena must be consistent with the results of experimental psychology and with all the discoveries that psychologists and neurologists have made about the anatomy of the nervous system. From the information-processing side, computer scientists want to know how the visual system could be programmed and which algorithms are best suited to a given task. Only a comprehensive theory of this kind can be accepted as a satisfactory explanation of vision.

Marr worked on this problem from 1973 to 1980. Unfortunately, he did not live to complete his work, but he laid a solid foundation for further research.